Apple researchers have released a model that lets users edit a photo by describing the changes they want in plain language, rather than working through traditional photo editing software.

The MGIE model, developed with the University of California, Santa Barbara, lets users crop, resize, flip, and apply filters to images using text commands alone.

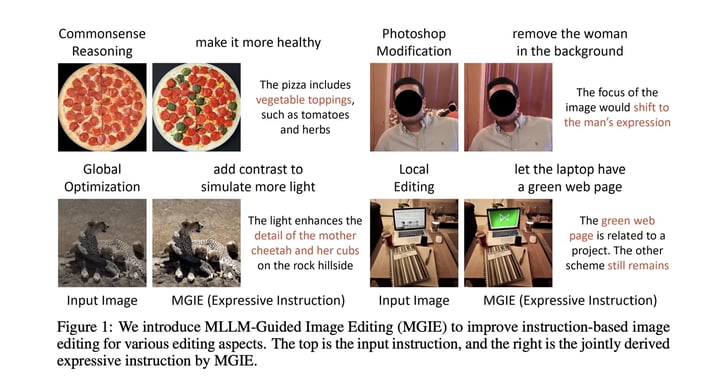

MGIE, short for MLLM-Guided Image Editing, handles everything from basic adjustments to more complex edits, such as reshaping specific objects or increasing brightness. It uses a multimodal large language model (MLLM) to interpret the user's prompt and guide the resulting visual edit. For instance, asking the model to "make it more healthy" on a photo of a pepperoni pizza adds vegetable toppings, while instructing it to "add more contrast to simulate more light" on a dark image of tigers in the Sahara produces a brighter picture.

The researchers behind MGIE say its main contribution is turning a user's brief request into explicit, visually aware editing instructions, which leads to more effective image edits. They present this as an advance in vision-and-language research.

Image: Apple

Apple has made MGIE available for download on GitHub and offers a web demo on Hugging Face Spaces, but it has not said how it plans to use the model beyond research.

Other image generation platforms, such as OpenAI's DALL-E 3 and Adobe's Firefly AI model, already offer similar capabilities. MGIE nonetheless marks Apple's entry into generative AI and fits CEO Tim Cook's stated aim of bringing more AI features to Apple products.

At Band of Coders, we understand the importance of leveraging cutting-edge technology to meet the evolving needs of our clients. Whether you're seeking innovative solutions for photo editing or exploring the potential of AI-driven applications, our team is here to assist you every step of the way. Contact us today to learn more about how we can help you achieve your goals.